Choosing between two treatments (Markov Decision Process)

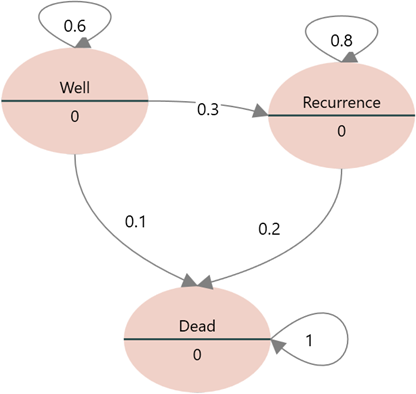

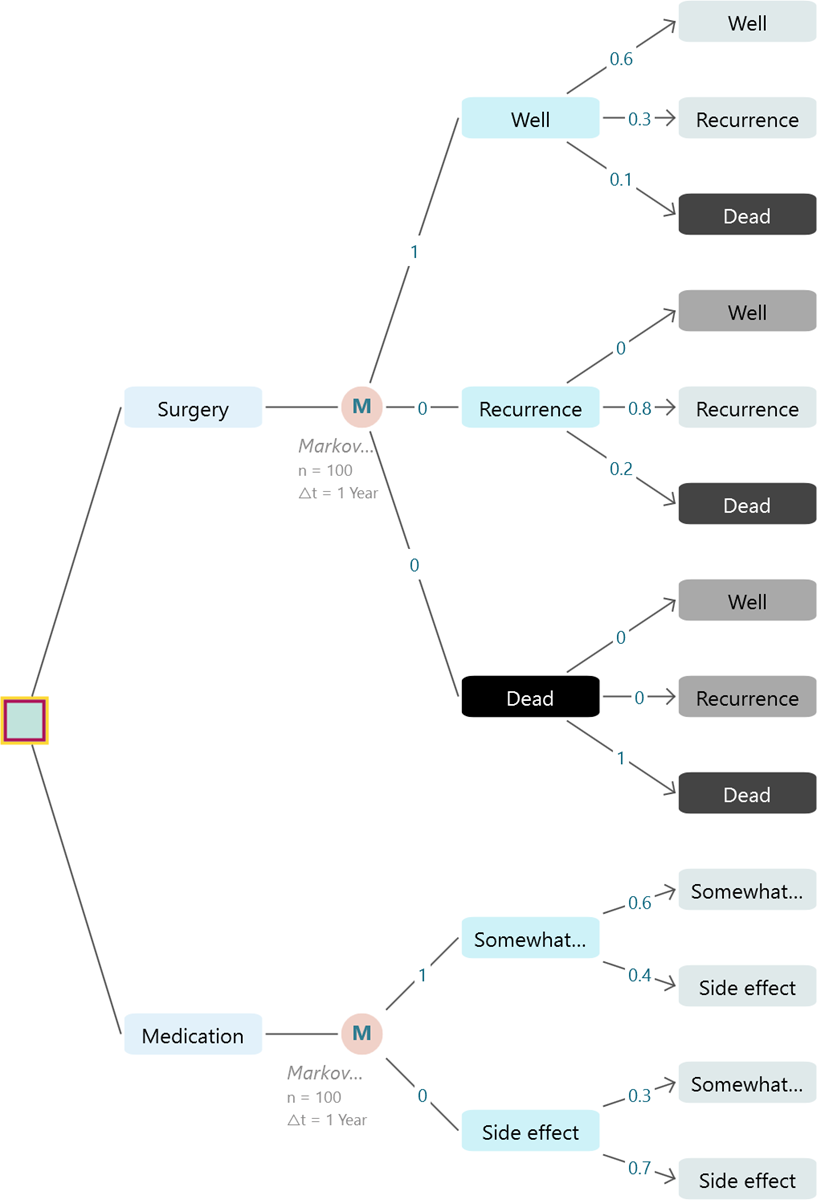

In this example, I will show you how a Markov chain can be integrated into a decision tree. Two Markov chains will represent two treatment options, and based on their expected values, we can choose the better strategy. We will consider two options: Surgery and Medication. If you choose surgery, the patient can go through 3 states over the next 10 years:

"Well", "Recurrence", "Dead"

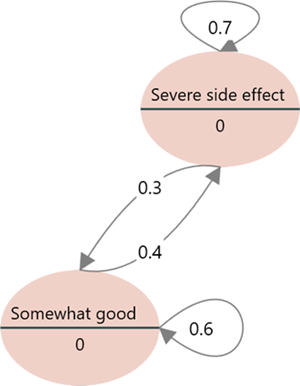

If you choose to stay on medication, the patient may go through the following states over the next 10 years:

"Somewhat good", "Severe side effect"

The Well state earns 0.89 QALY, the Recurrence state earns 0.5 QALY, and the Dead state, naturally, earns 0 QALY.

The Somewhat good state earns 0.68 QALY, and the Severe side effect state earns 0.2 QALY.

The transition probabilities are shown below.

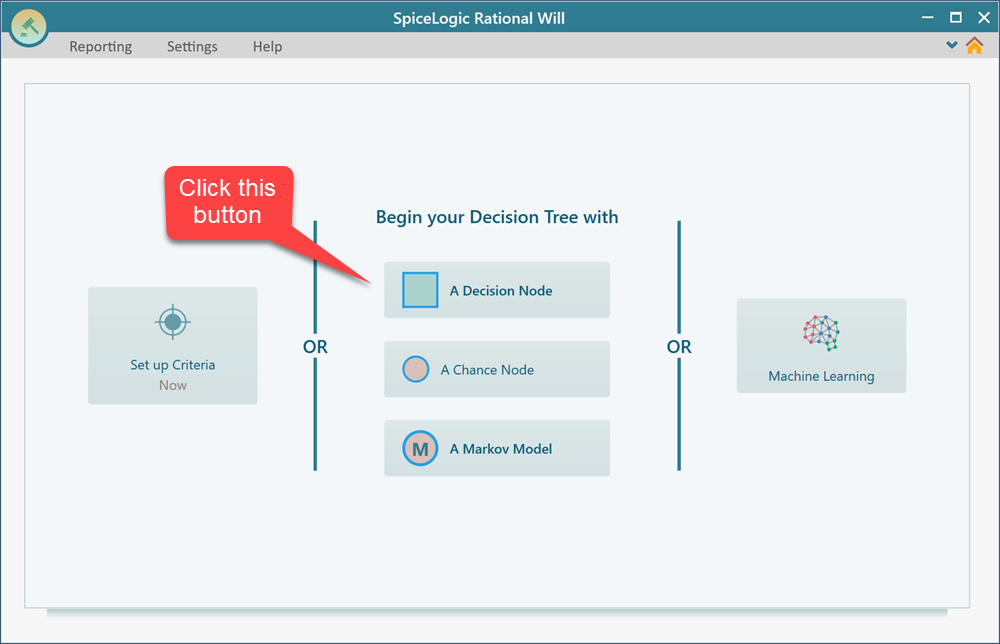
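To make the link between state payoffs and expected QALY concrete, here is a minimal Python sketch of a Markov cohort calculation for the Surgery chain. The QALY weights come from the text, but the transition matrix is a placeholder invented for illustration (the real probabilities are in the figure above), and the function name `expected_qaly` is hypothetical. The sketch also assumes that the patient starts in Well, that each state's QALY is credited at the end of every yearly cycle, and that nothing is discounted. The software may use different conventions, so do not expect these numbers to match its output.

```python
def expected_qaly(P, rewards, start, cycles=10):
    """Sum the expected per-cycle QALY of a Markov chain over `cycles` steps."""
    n = len(rewards)
    for row in P:
        if abs(sum(row) - 1.0) > 1e-9:
            raise ValueError("each row of P must sum to 1")
    # Start with the whole cohort in the `start` state.
    dist = [0.0] * n
    dist[start] = 1.0
    total = 0.0
    for _ in range(cycles):
        # Move the cohort one cycle forward: new_dist[j] = sum_i dist[i] * P[i][j]
        dist = [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]
        # Credit the QALY earned in the states occupied after this cycle.
        total += sum(d * r for d, r in zip(dist, rewards))
    return total

# State order: Well, Recurrence, Dead. QALY weights are taken from the text.
surgery_rewards = [0.89, 0.5, 0.0]

# HYPOTHETICAL transition probabilities, for illustration only.
surgery_P = [
    [0.80, 0.15, 0.05],  # from Well
    [0.00, 0.70, 0.30],  # from Recurrence
    [0.00, 0.00, 1.00],  # from Dead (absorbing)
]

print(round(expected_qaly(surgery_P, surgery_rewards, start=0), 2))
```

The same function can be applied to the Medication chain by passing its own transition matrix and QALY weights.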

Let's start with the Rational Will or Decision Tree software and choose the Decision Node as the root node.

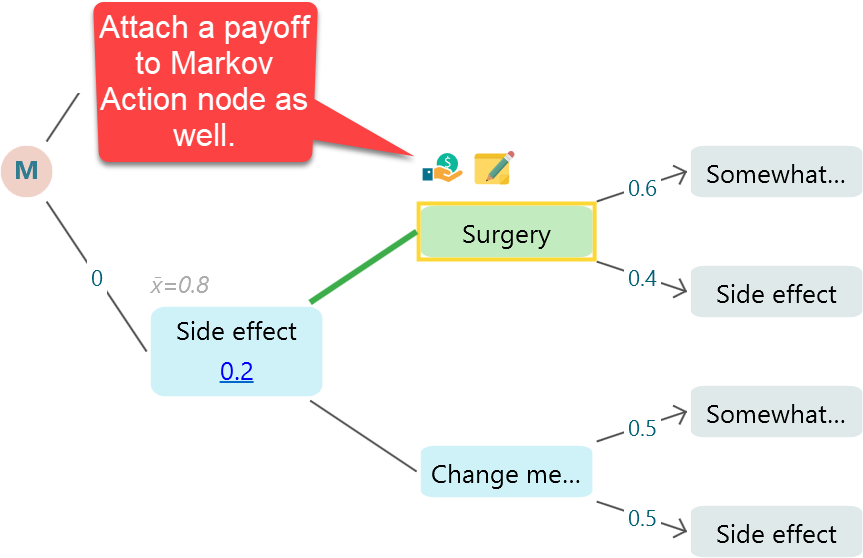

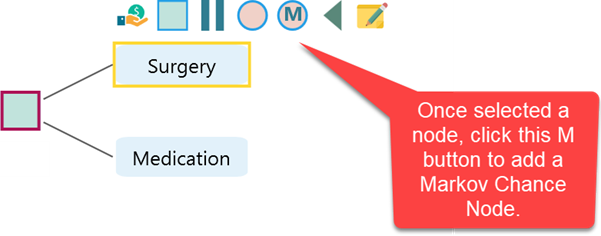

Then create two actions, "Surgery" and "Medication". After that, attach a Markov Chance node to each of these actions. As soon as you click "Markov Chance Node", a wizard will start. Then, as you learned in "QALY and Cost with Markov Model", complete the two Markov chains as shown below.

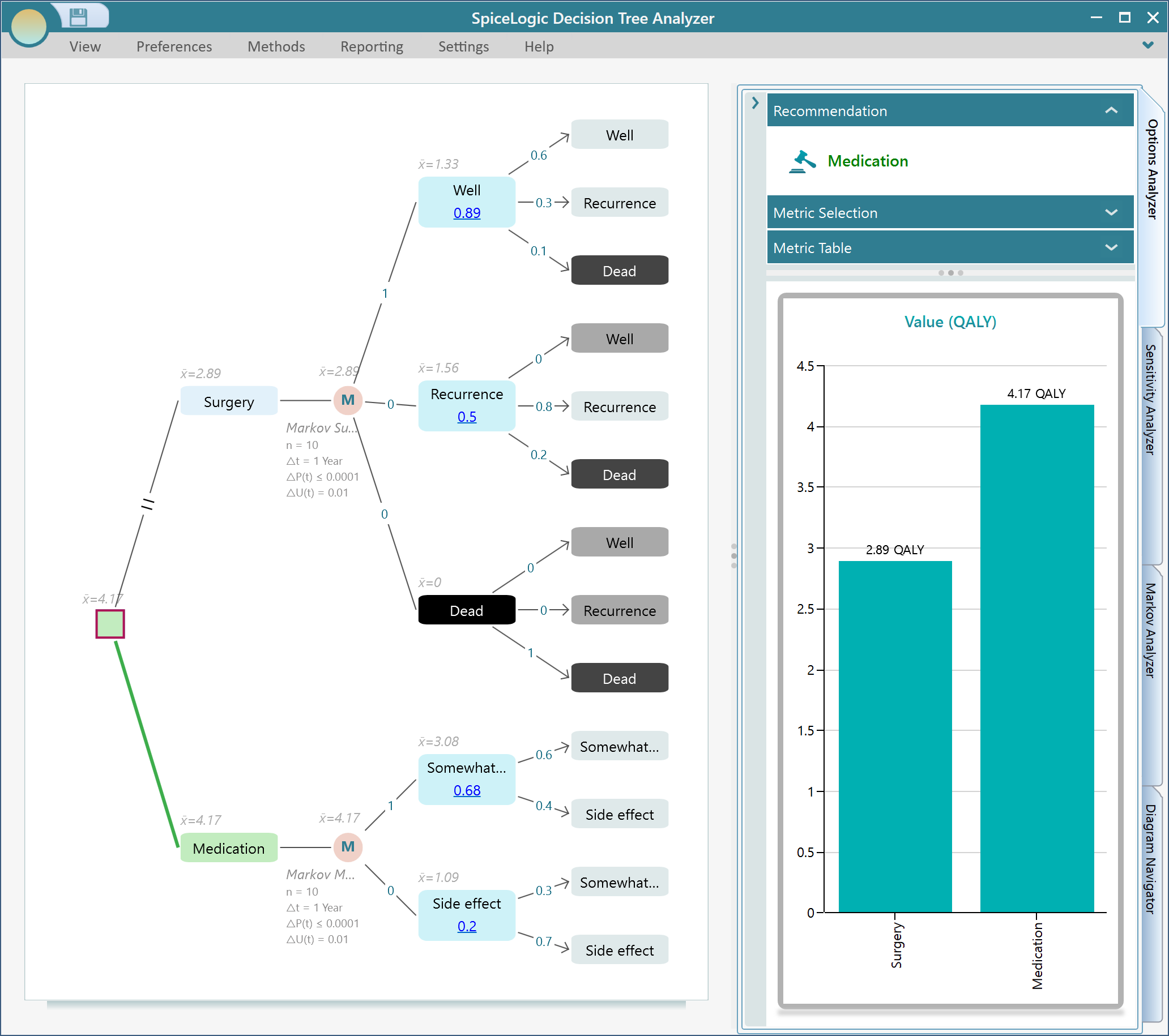

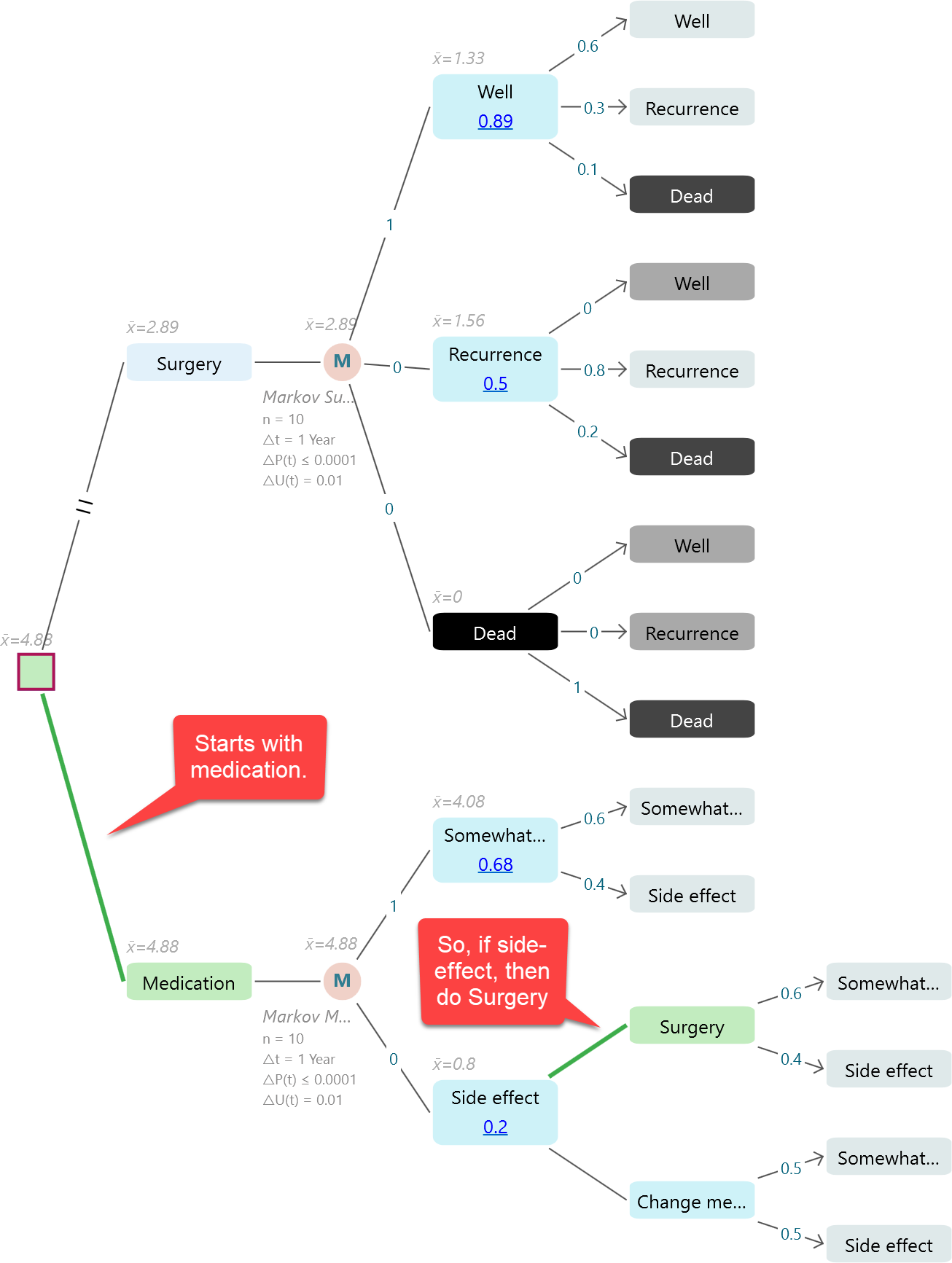

Now, set the payoff for each state. The decision tree then completes the simulation and shows that Surgery gives 2.89 expected QALY over the next 10 years, while Medication gives 4.17 expected QALY over the same period. The decision tree highlights the Medication path in green, indicating that Medication is the better option.

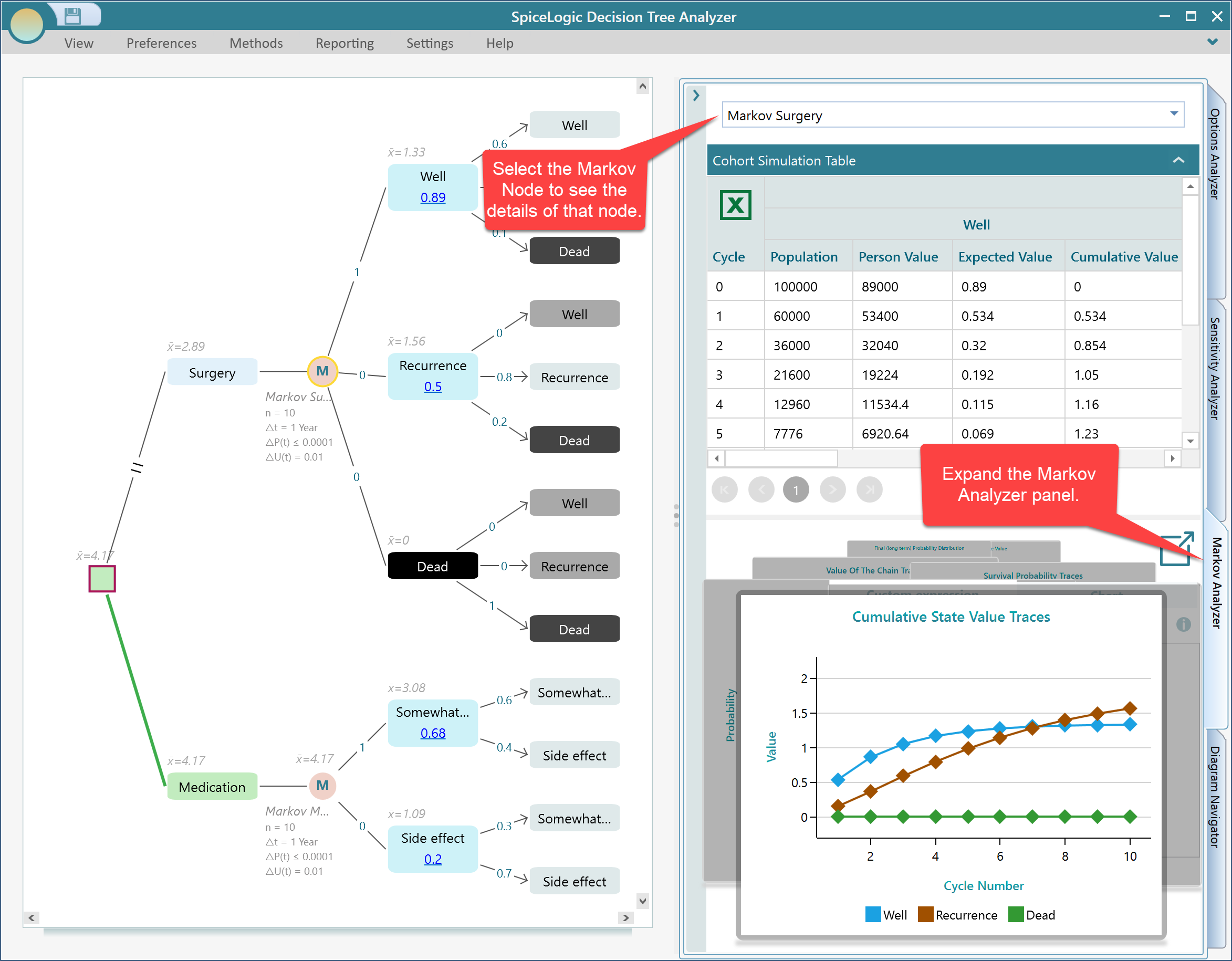
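The choice at the root Decision Node comes down to picking the action with the highest expected QALY. A tiny Python sketch of that comparison, using the two values reported above (the variable names are only illustrative):

```python
# Expected QALY over 10 years, as reported by the decision tree in this example.
strategy_qaly = {"Surgery": 2.89, "Medication": 4.17}

# The decision node selects the action with the largest expected value.
best_strategy = max(strategy_qaly, key=strategy_qaly.get)
print(best_strategy)  # Medication
```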

Expand the Markov Analyzer panel and select a Markov Node to see the details of that Markov node.

Extending to Markov Decision Process

Now, think about this. Suppose you develop severe side effects from the medication. At that stage, you may have two options: perform surgery or change medication. Depending on the action you take, you face different transition probabilities. Wouldn't you want to know the best policy for the Side effect state? You are in good hands. The SpiceLogic Decision Tree software lets you model a Markov Decision Process, a Markov modeling technique in which you define the available actions under a state and find the best policy for that state.

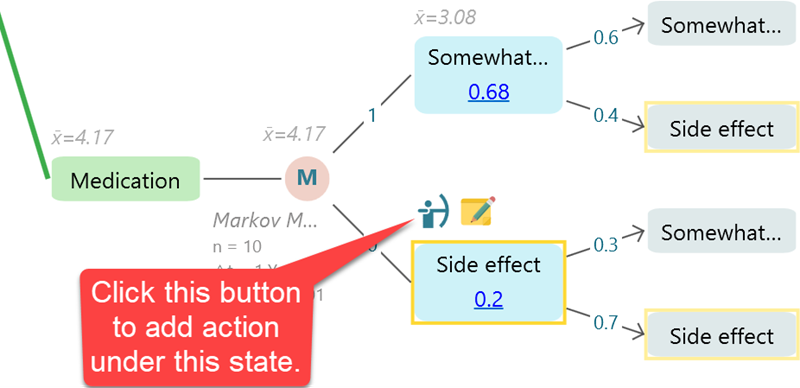

Let's do that. Select the node "Side effect" and click this fly-over menu item to add actions. Create two actions named "Surgery" and "Change medication".

Now, see the magic. The software has run the calculation and found that undergoing surgery in the "Side effect" state gives the best overall QALY. So you now have a policy:

1. Start with Medication.

2. But, if you encounter severe side effects, then undergo surgery.
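For readers curious how such a policy can be derived, here is a minimal Python sketch of finite-horizon backward induction, one standard way to solve a Markov Decision Process. It is not necessarily the algorithm the software uses. The QALY weights come from the text, while every transition probability, the `MODEL` layout, and the function name `solve_finite_horizon` are hypothetical. The sketch assumes that rewards are credited on arrival in a state and that nothing is discounted.

```python
def solve_finite_horizon(model, rewards, horizon=10):
    """Backward induction: returns (value per state, best first-step action per state)."""
    values = {state: 0.0 for state in model}  # nothing left to earn at the horizon
    policy = {}
    for _ in range(horizon):
        new_values = {}
        for state, actions in model.items():
            # Expected QALY of each action: reward on arrival plus future value.
            scored = {
                action: sum(p * (rewards[nxt] + values[nxt]) for nxt, p in trans.items())
                for action, trans in actions.items()
            }
            best = max(scored, key=scored.get)
            policy[state] = best  # after the last pass: the action with `horizon` cycles left
            new_values[state] = scored[best]
        values = new_values
    return values, policy

# QALY weights from the text.
QALY = {"Somewhat good": 0.68, "Side effect": 0.2,
        "Well": 0.89, "Recurrence": 0.5, "Dead": 0.0}

# HYPOTHETICAL transition probabilities, for illustration only.
MODEL = {
    "Somewhat good": {"Continue": {"Somewhat good": 0.80, "Side effect": 0.20}},
    "Side effect": {
        "Surgery": {"Well": 0.80, "Recurrence": 0.15, "Dead": 0.05},
        "Change medication": {"Somewhat good": 0.40, "Side effect": 0.60},
    },
    "Well": {"Continue": {"Well": 0.80, "Recurrence": 0.15, "Dead": 0.05}},
    "Recurrence": {"Continue": {"Recurrence": 0.70, "Dead": 0.30}},
    "Dead": {"Continue": {"Dead": 1.0}},
}

values, policy = solve_finite_horizon(MODEL, QALY, horizon=10)
print(policy["Side effect"], round(values["Somewhat good"], 2))
```

Which action wins in "Side effect" depends entirely on the probabilities you supply, so the result of this sketch says nothing about the real example.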

The surprises do not end there. Just as you attach a payoff to a state, you can attach a payoff to a Markov action as well. The payoff on an action is taken into account when calculating the final QALY. For example, surgery may be expensive, while changing medication may be cheap. You can use a Cost-Effectiveness type payoff and associate a cost with each action.
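To illustrate how an action cost can change which action looks best, here is a small Python sketch that uses net monetary benefit (willingness to pay times QALY, minus cost), a common summary measure in health economics. Whether the software combines cost and QALY this way is not stated here, so treat it as an assumption. All QALY totals, costs, and willingness-to-pay values below are hypothetical.

```python
def net_monetary_benefit(qaly, cost, willingness_to_pay):
    """NMB = willingness_to_pay * QALY - cost."""
    return willingness_to_pay * qaly - cost

def best_action(actions, willingness_to_pay):
    """Return the action name with the highest net monetary benefit."""
    scores = {
        name: net_monetary_benefit(a["qaly"], a["cost"], willingness_to_pay)
        for name, a in actions.items()
    }
    return max(scores, key=scores.get)

# HYPOTHETICAL expected QALY and cost for each action in the "Side effect" state.
side_effect_actions = {
    "Surgery": {"qaly": 5.0, "cost": 40000.0},
    "Change medication": {"qaly": 4.2, "cost": 3000.0},
}

# The preferred action can flip as the value placed on one QALY changes.
print(best_action(side_effect_actions, willingness_to_pay=50000.0))
print(best_action(side_effect_actions, willingness_to_pay=30000.0))
```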