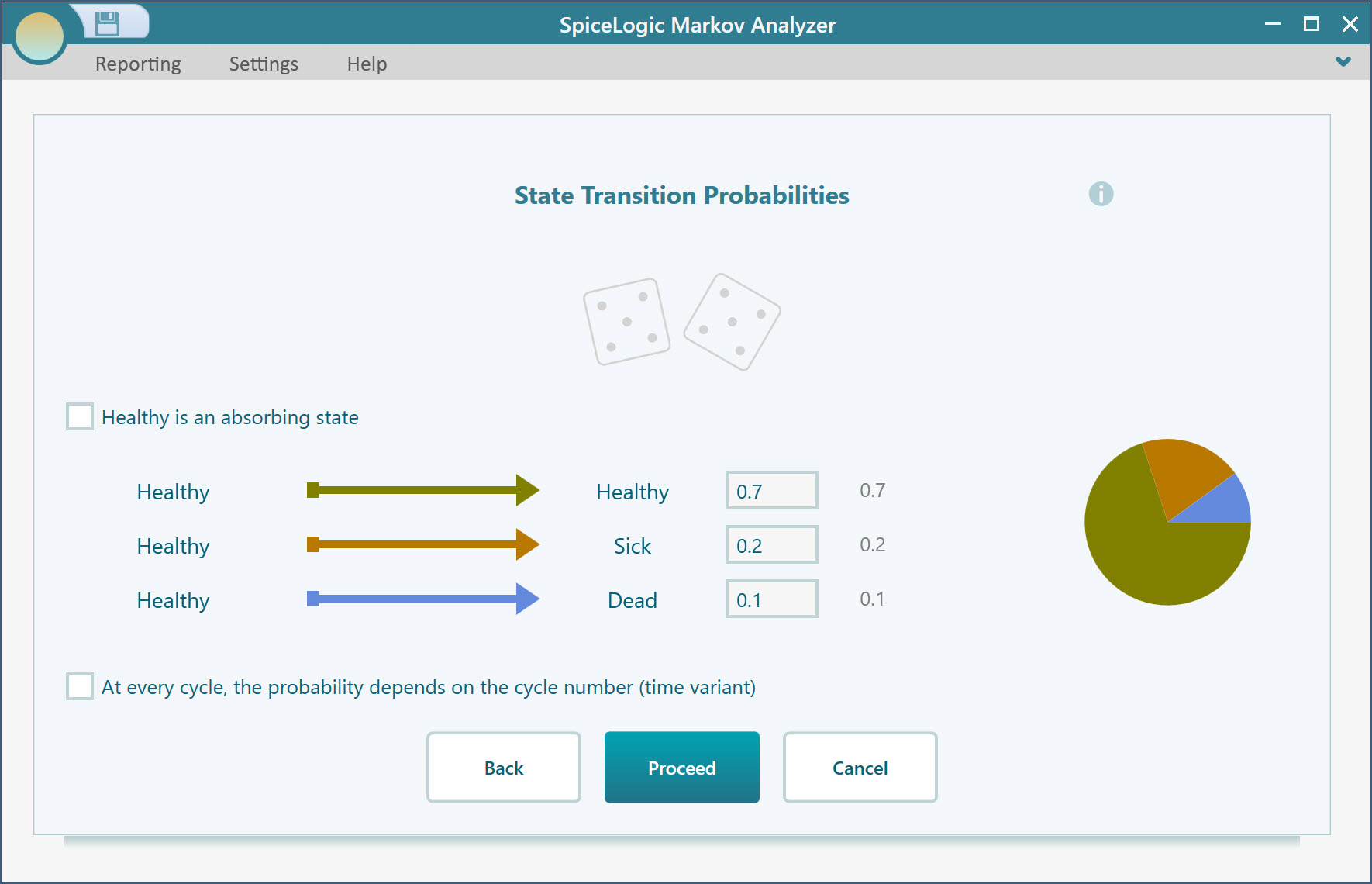

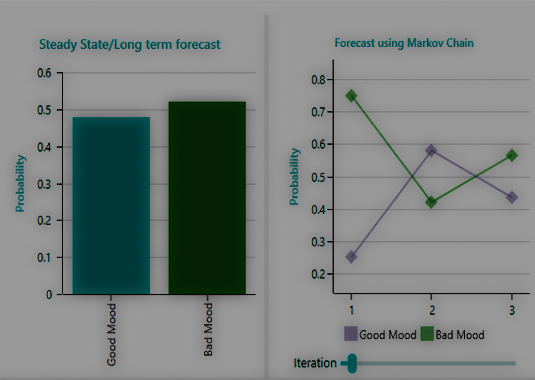

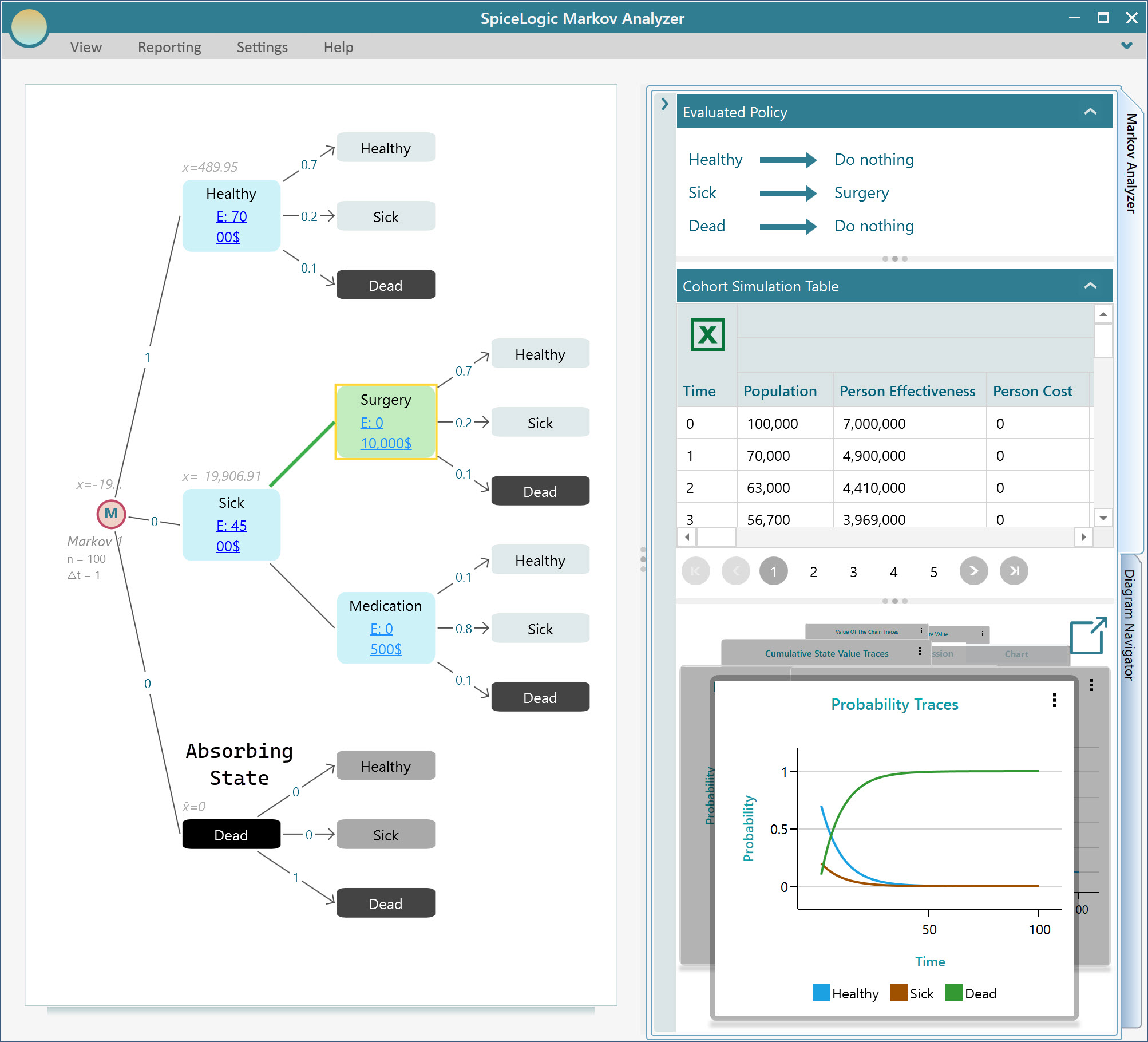

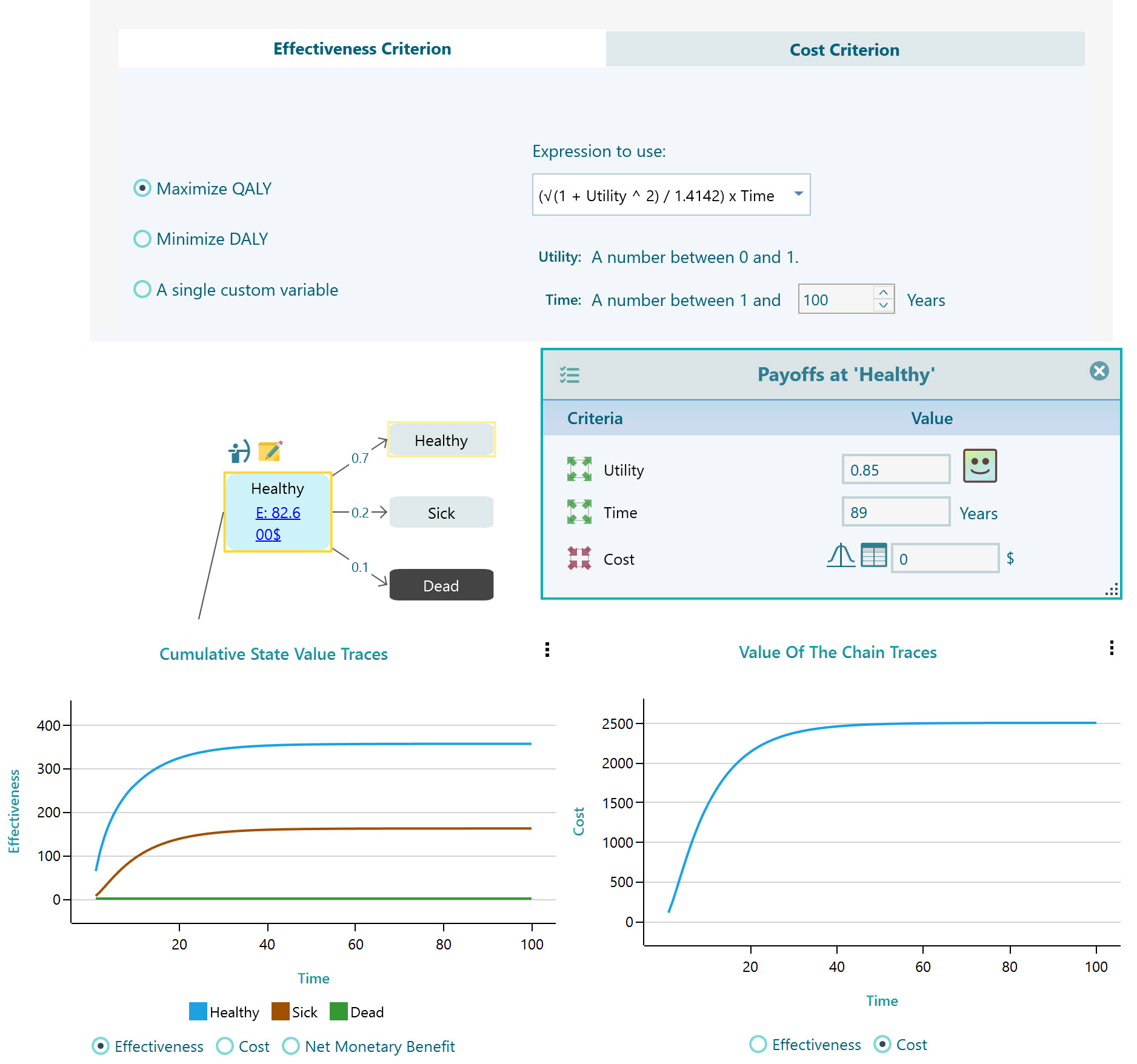

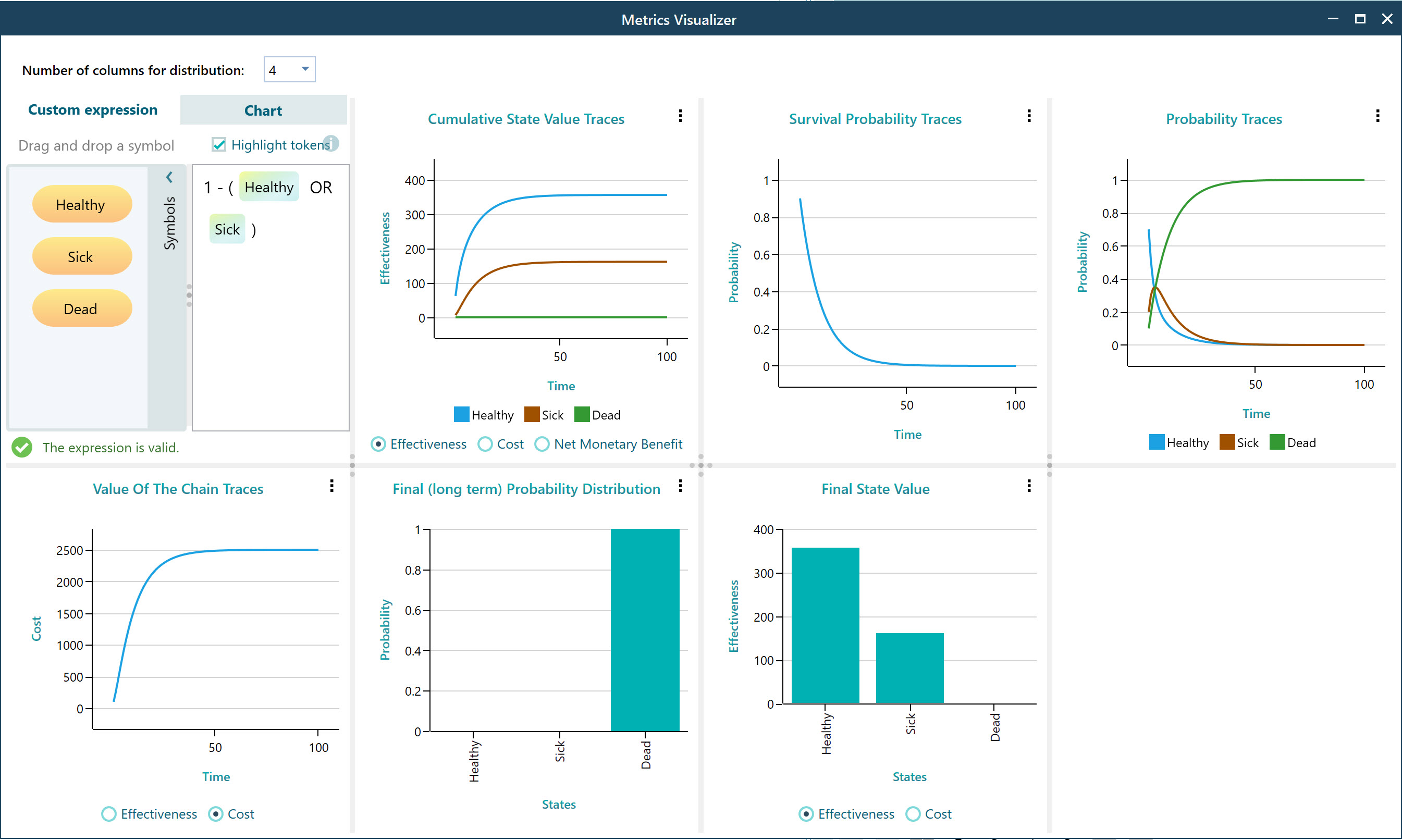

Model a Markov Decision Process with a modern, intuitive wizard

SpiceLogic's Markov Decision Process software offers a rich modeling application. It starts with a wizard that collects all the information needed to create your model.